About me

I am a Ph.D. candidate in Electrical and Computer Engineering at New York University, advised by Prof. Brandon Reagen. My research explores the mathematical foundations of large language models (LLMs)—how information, geometry, and learning dynamics interact and shape the stability, efficiency, and scaling behavior of LLMs.

In particular, my work pursues three complementary thrusts: 1) representation integrity (entropy budgets, spectral utilization, stability regimes), 2) scientific foundations (information theory, inductive biases, scaling laws), and 3) high-dimensional learning dynamics (eigenspectra, weight manifolds, spectral geometry).

The long-term goal is to build first-principles frameworks for architecture-optimizer co-design in LLMs with an emphasis on representational integrity. This effort led to NerVE (ICLR 2026), an eigenspectral framework that quantifies the nonlinear transformations of FFNs, and Spectral Scaling Laws for quantifying FFN width utilization (EMNLP 2025).

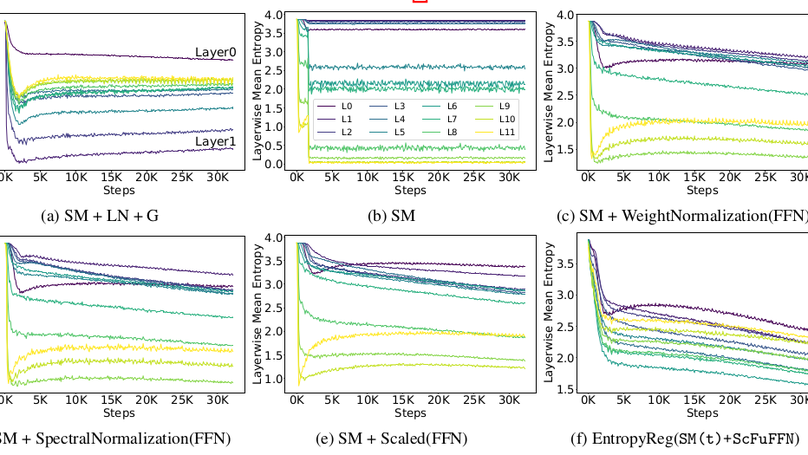

I also developed AERO, an information-theoretic framework that studies how nonlinearities influence entropy budgets of attention mechanisms and introduces entropy-guided attention for private LLM architectures with fewer nonlinear operations. Preliminary results appeared at PPAI@AAAI'25 and ATTRIB@NeurIPS'24.

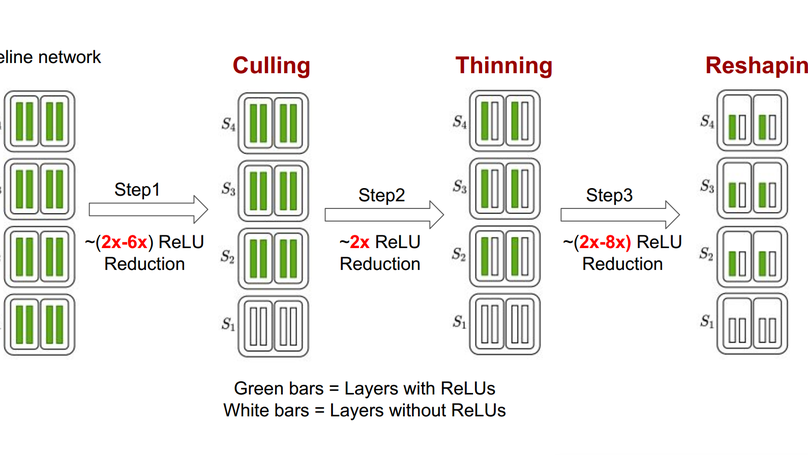

Earlier in my Ph.D., as part of the DPRIVE project, I proposed new architectures and algorithms for efficient inference on encrypted data. This includes DeepReDuce (ICML'21, Spotlight talk), a ReLU-optimization technique, and DeepReShape (TMLR'24), a family of CNNs redesigned for private inference efficiency. Both works redefined the state of the art in private inference, achieving 3.5× and 8.7× speedups over prior SOTA, respectively.

Recent talks: We presented Entropy and Private Language Models at the NYU CILVR Seminar and Entropy-Guided Attention for Private LLMs on the AI Fireside Chat.

Besides research, I have contributed as an (invited) reviewer for NeurIPS (2023-25), ICML (2024, 2025), ICLR (2024-26), TMLR (2025-), AISTATS (2025, 2026), CVPR (2024, 2025), ICCV (2025), and AAAI (2025).

Interests

- Representation Integrity in LLMs

- Architecture-Optimizer Co-design

- Nonlinear Representation Dynamics

- Cryptographically Secure PPML

Education

Ph.D. in Electrical and Computer Engineering, 2020 - present

New York University

M.Tech. (Research) in Computer Science and Engineering, 2017 - 2020

Indian Institute of Technology Hyderabad

B.Tech. in Electronics and Communication Engineering, 2009 - 2013

National Institute of Technology Surat

News & Updates

Recent Highlights

- 2025-01 · AAAI’25 (PPAI Workshop) — Entropy-Guided Attention accepted. arXiv

- 2024-10 · NeurIPS’24 (ATTRIB) — Talk on ReLU in norm-free LLMs. arXiv [Slides]

- 2024-10 · Preprint — AERO: Softmax-only private LLMs with entropy regularization released. arXiv [Code]

- 2024-09 · TMLR — DeepReShape accepted (ReLU-equalization, HybReNets). arXiv

- 2023-03 · ASPLOS — End-to-end private inference system accepted. arXiv

- 2021-12 · NeurIPS — Circa accepted (GC + stochastic ReLUs). arXiv

- 2021-11 · Preprint — CryptoNite: throughput limits under realistic load. arXiv

- 2021-11 · ACM CCS (PPML) — Sisyphus accepted (Quadratic Imitation Learning). arXiv

- 2021-07 · ICML (Spotlight) — DeepReDuce: criticality-based ReLU dropping. arXiv

See older updates on the All News page.

Featured Publications

We introduce an information-theoretic framework to characterize the role of nonlinearities in decoder-only language models, laying a principled foundation for optimizing transformer architectures tailored to the demands of Private Inference (PI). By leveraging Shannon’s entropy as a quantitative measure, we uncover the previously unexplored dual significance of nonlinearities: beyond ensuring training stability, they are crucial for maintaining attention head diversity. Specifically, we find that their removal triggers two critical failure modes: entropy collapse in deeper layers, which destabilizes training, and entropic overload in earlier layers, which leads to under-utilization of Multi-Head Attention’s (MHA) representational capacity. We propose an entropy-guided attention mechanism paired with a novel entropy regularization technique to mitigate entropic overload. Additionally, we explore inference-efficient alternatives to layer normalization for preventing entropy collapse and stabilizing the training of LLMs with reduced nonlinearities. Our study bridges the gap between information theory and architectural design, establishing entropy dynamics as a principled guide for developing efficient PI architectures.
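As a rough illustration of the quantity the abstract refers to, the sketch below computes the Shannon entropy of softmax attention rows for a single head. It is a minimal example under stated assumptions, not the paper's implementation: the helper names (`softmax`, `shannon_entropy`, `head_entropy`) and the toy attention logits are hypothetical. Values near the maximum, log of the context length, loosely correspond to what the abstract calls entropic overload, and values near zero to entropy collapse.

```python
import math

def softmax(scores):
    """Numerically stable softmax over one row of attention logits."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def shannon_entropy(probs):
    """Shannon entropy in nats; zero-probability terms contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def head_entropy(score_rows):
    """Mean attention entropy over query positions for one head."""
    entropies = [shannon_entropy(softmax(row)) for row in score_rows]
    return sum(entropies) / len(entropies)

# Hypothetical logits for two heads over a context of 4 tokens.
uniform_head = [[0.0, 0.0, 0.0, 0.0]] * 4   # attends equally everywhere
peaked_head = [[50.0, 0.0, 0.0, 0.0]] * 4   # attends to a single token

max_entropy = math.log(4)  # upper bound for a context of 4 tokens
print("uniform head, fraction of max entropy:",
      head_entropy(uniform_head) / max_entropy)
print("peaked head, fraction of max entropy:",
      head_entropy(peaked_head) / max_entropy)
```

Reporting entropy as a fraction of its maximum makes heads comparable across context lengths; the uniform head sits at the upper bound and the peaked head sits near zero.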

DeepReDuce is a set of optimizations for the judicious removal of ReLUs to reduce private inference latency by leveraging the heterogeneity of ReLUs in classical networks. DeepReDuce strategically drops ReLUs by up to 4.9× (on CIFAR-100) and 5.7× (on TinyImageNet) for ResNet18 with no loss in accuracy. Compared to the state of the art for private inference, DeepReDuce improves accuracy by up to 3.5% (iso-ReLU) and reduces ReLU count by up to 3.5× (iso-accuracy).

Recent Publications

Experience

Responsibilities include:

- Designing power delivery circuits for M.2 Solid State Drives

- Electrical characterization of DRAM and NAND modules

- Signal integrity verification of the DRAM/NAND datapath

Press & Media

Press Release

Cracking the code of private AI: The role of entropy in secure language models

NYU Tandon School of Engineering • March 2025

Team streamlines neural networks to be more adept at computing on encrypted data

NYU Tandon School of Engineering • June 2021

News

Random Matrix Analysis Reveals Capacity Bottlenecks in Transformer Multi-Head Attention

Quantum Zeitgeist • July 2025

Team streamlines neural networks to be more adept at computing on encrypted data

TechXplore • July 2021

Team streamlines neural networks to be more adept at computing on encrypted data

ScienceDaily • July 2021

Article

Making Private AI Practical: A Review of “Entropy-Guided Attention for Private LLM”

by Roma Shusterman, CTO at Brain Electrophysiological Laboratory (BEL) • March 2025

Contact

- nj2049@nyu.edu

- 1032-3, 10th Floor, 370 Jay Street, Brooklyn, NY 11201

- Book an appointment

- DM Me