Roofline with the proposed DI

Abstract

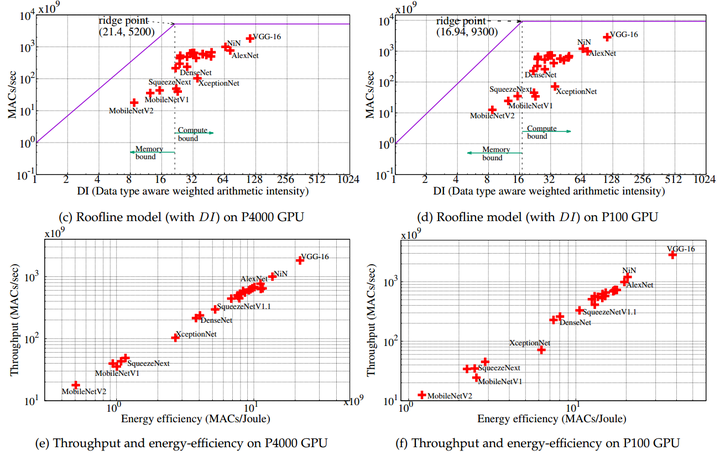

In recent years, researchers have focused on reducing the model size and the number of computations (measured as “multiply-accumulate”, or MAC, operations) of deep neural networks (DNNs). The energy consumption of a DNN depends on both the number of MAC operations and the energy efficiency of each MAC operation. The former can be estimated at design time; the latter, however, depends on intricate data reuse patterns and the underlying hardware architecture, and is therefore challenging to estimate at design time. In this work, we show that the conventional approach to estimating data reuse, viz. arithmetic intensity, does not always correctly estimate the degree of data reuse in DNNs, because it gives equal importance to all data types. We propose a novel model, termed “data type aware weighted arithmetic intensity” (DI), which accounts for the unequal importance of different data types in DNNs. We evaluate our model on 25 state-of-the-art DNNs on two GPUs and show that it accurately models data reuse for all possible data reuse patterns, for different types of convolution, and for different types of layers. We show that our model is a better indicator of the energy efficiency of DNNs than conventional arithmetic intensity. We also show its generality using the central limit theorem.
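To make the contrast between conventional arithmetic intensity and a data-type-aware weighting concrete, here is a minimal sketch for a single 2-D convolution layer. It assumes ideal reuse: each input, weight, and output element is moved exactly once, at 4 bytes per element. Under that assumption, conventional arithmetic intensity is MACs divided by total bytes, with every data type weighted equally. The `weights` dictionary and `weighted_intensity` function are hypothetical illustrations of giving data types unequal importance. The abstract does not state the actual DI formula, so this is not the authors' model. The `roofline` helper applies the standard roofline bound: attainable throughput equals min(peak compute, intensity × memory bandwidth).

```python
from dataclasses import dataclass


@dataclass
class ConvLayer:
    """Shape of a 2-D convolution with a square kernel (output size given directly)."""
    n: int       # batch size
    c_in: int    # input channels
    h_in: int    # input height
    w_in: int    # input width
    c_out: int   # output channels
    k: int       # kernel height = kernel width
    h_out: int   # output height
    w_out: int   # output width

    def macs(self) -> int:
        # Each output element needs c_in * k * k multiply-accumulates.
        return self.n * self.c_out * self.h_out * self.w_out * self.c_in * self.k * self.k

    def footprint(self) -> dict:
        # Element count per data type, each counted once (ideal-reuse assumption).
        return {
            "input": self.n * self.c_in * self.h_in * self.w_in,
            "weight": self.c_out * self.c_in * self.k * self.k,
            "output": self.n * self.c_out * self.h_out * self.w_out,
        }


def arithmetic_intensity(layer: ConvLayer, bytes_per_elem: int = 4) -> float:
    # Conventional AI in MACs per byte: all data types count equally.
    total_bytes = sum(layer.footprint().values()) * bytes_per_elem
    return layer.macs() / total_bytes


def weighted_intensity(layer: ConvLayer, weights: dict, bytes_per_elem: int = 4) -> float:
    # HYPOTHETICAL weighted variant for illustration only (not the paper's DI):
    # each data type's traffic is scaled by a user-chosen importance weight.
    fp = layer.footprint()
    weighted_bytes = sum(weights[t] * fp[t] for t in fp) * bytes_per_elem
    return layer.macs() / weighted_bytes


def roofline(intensity: float, peak_macs_per_s: float, bandwidth_bytes_per_s: float) -> float:
    # Attainable MAC/s is capped by the compute roof or the memory roof.
    return min(peak_macs_per_s, intensity * bandwidth_bytes_per_s)


if __name__ == "__main__":
    # A 3x3 convolution, 64 -> 64 channels, on a 56x56 feature map (padding 1).
    conv = ConvLayer(n=1, c_in=64, h_in=56, w_in=56, c_out=64, k=3, h_out=56, w_out=56)
    print("MACs:", conv.macs())
    print("AI (MAC/byte):", arithmetic_intensity(conv))
    # Illustrative weights that treat weight traffic as twice as costly.
    print("weighted:", weighted_intensity(conv, {"input": 1.0, "weight": 2.0, "output": 1.0}))
```

With all weights set to 1.0, `weighted_intensity` reduces to `arithmetic_intensity`, which is exactly the equal-importance assumption the abstract identifies as a limitation. Any unequal weighting shifts a layer's position on the roofline, and with it the predicted bound.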