Simplification of the ReLU operation in garbled circuits

Abstract

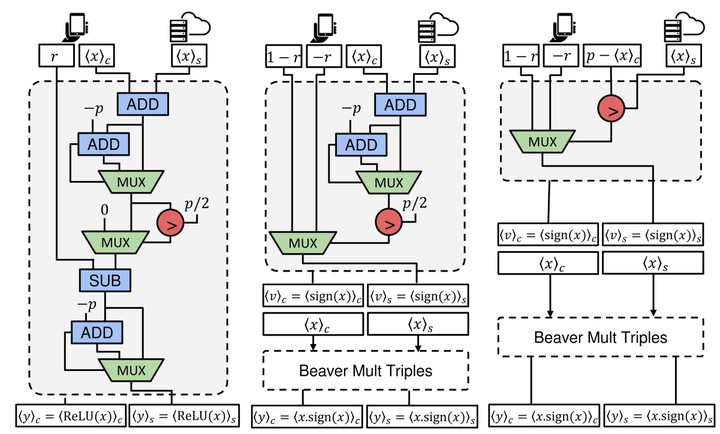

The simultaneous rise of machine learning as a service and concerns over user privacy have increasingly motivated the need for private inference (PI). While recent work demonstrates PI is possible using cryptographic primitives, the computational overheads render it impractical. The community is largely unprepared to address these overheads, as the source of slowdown in PI stems from the ReLU operator whereas optimizations for plaintext inference focus on optimizing FLOPs. In this paper, we re-think the ReLU computation and propose optimizations for PI tailored to properties of neural networks. Specifically, we reformulate ReLU as an approximate sign test and introduce a novel truncation method for the sign test that significantly reduces the cost per ReLU. These optimizations result in a specific type of stochastic ReLU. The key observation is that the stochastic fault behavior is well suited for the fault-tolerant properties of neural network inference. Thus, we provide significant savings without impacting accuracy. We collectively call the optimizations Circa and demonstrate improvements of up to 4.7× storage and 3× runtime over baseline implementations; we further show that Circa can be used on top of recent PI optimizations to obtain 1.8× additional speedup.
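To make the idea of a truncated sign test concrete, the following is a minimal plaintext simulation, not the paper's protocol and not a garbled circuit. It assumes values are additively secret-shared in a hypothetical ring Z_{2^32} and that the sign is read from the top bit after the `k` low bits of each share are dropped. The ring size and all function names are illustrative assumptions. The sketch shows how a sign test on fewer bits can produce occasional faults that are confined to small-magnitude inputs.

```python
import random

N_BITS = 32                      # assumed ring size: values live in Z_{2^32}
MASK = (1 << N_BITS) - 1


def share(x, rng):
    """Split a signed integer x into two additive shares modulo 2^N_BITS."""
    a = rng.randrange(1 << N_BITS)
    b = (x - a) & MASK
    return a, b


def truncated_sign_is_nonneg(a, b, k):
    """Approximate sign test: drop the k low bits of each share, add the
    truncated shares modulo 2^(N_BITS - k), and inspect the top bit."""
    width = N_BITS - k
    s = ((a >> k) + (b >> k)) & ((1 << width) - 1)
    return (s >> (width - 1)) == 0   # top bit clear: treated as non-negative


def stochastic_relu(x, k, rng):
    """ReLU driven by the truncated sign test (simulated in the clear).
    Assumes x is far from the ring boundary -2^(N_BITS - 1)."""
    a, b = share(x, rng)
    return x if truncated_sign_is_nonneg(a, b, k) else 0


def relu(x):
    return max(x, 0)


def fault_rate(x, k, trials, rng):
    """Fraction of trials in which the stochastic ReLU differs from ReLU."""
    faults = sum(stochastic_relu(x, k, rng) != relu(x) for _ in range(trials))
    return faults / trials
```

In this sketch the truncated sum can differ from the true truncated value by at most one lost carry, so a fault occurs only when `0 < x < 2**k` (the input is zeroed instead of passed through), and the error is therefore smaller than `2**k`. With `k = 0` the test is exact. The comparison operates on `N_BITS - k` bits rather than `N_BITS`, which is the kind of saving a garbled-circuit sign test could exploit under these assumptions.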